Similar to pandas, petl lets the user build tables in Python by extracting from a number of possible data sources (csv, xls, html, txt, json, etc) and outputting to your database or storage format of choice. Petl is a Python package for ETL (hence the name ‘petl’). Consider Spark if you need speed and size in your data operations.

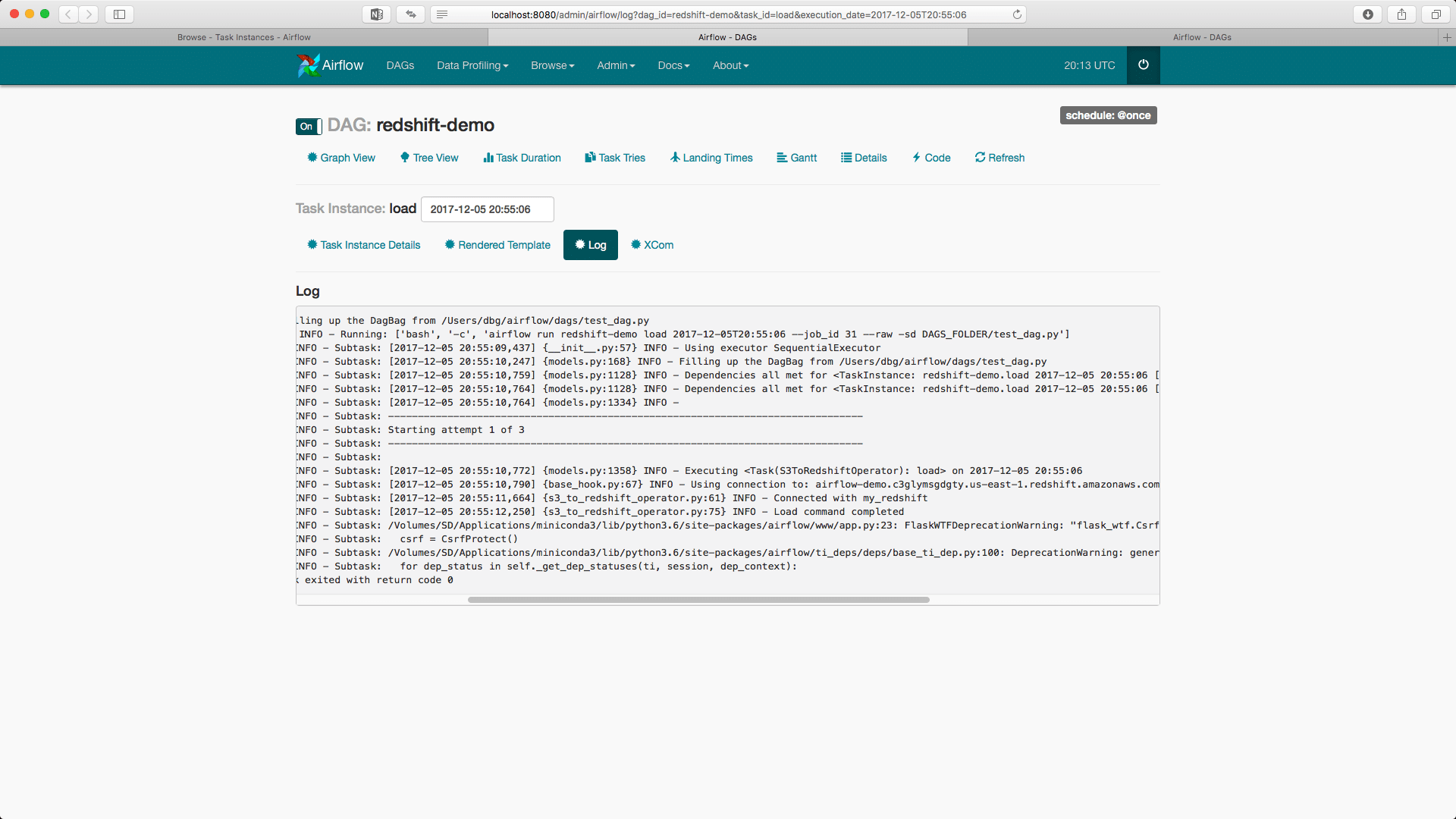

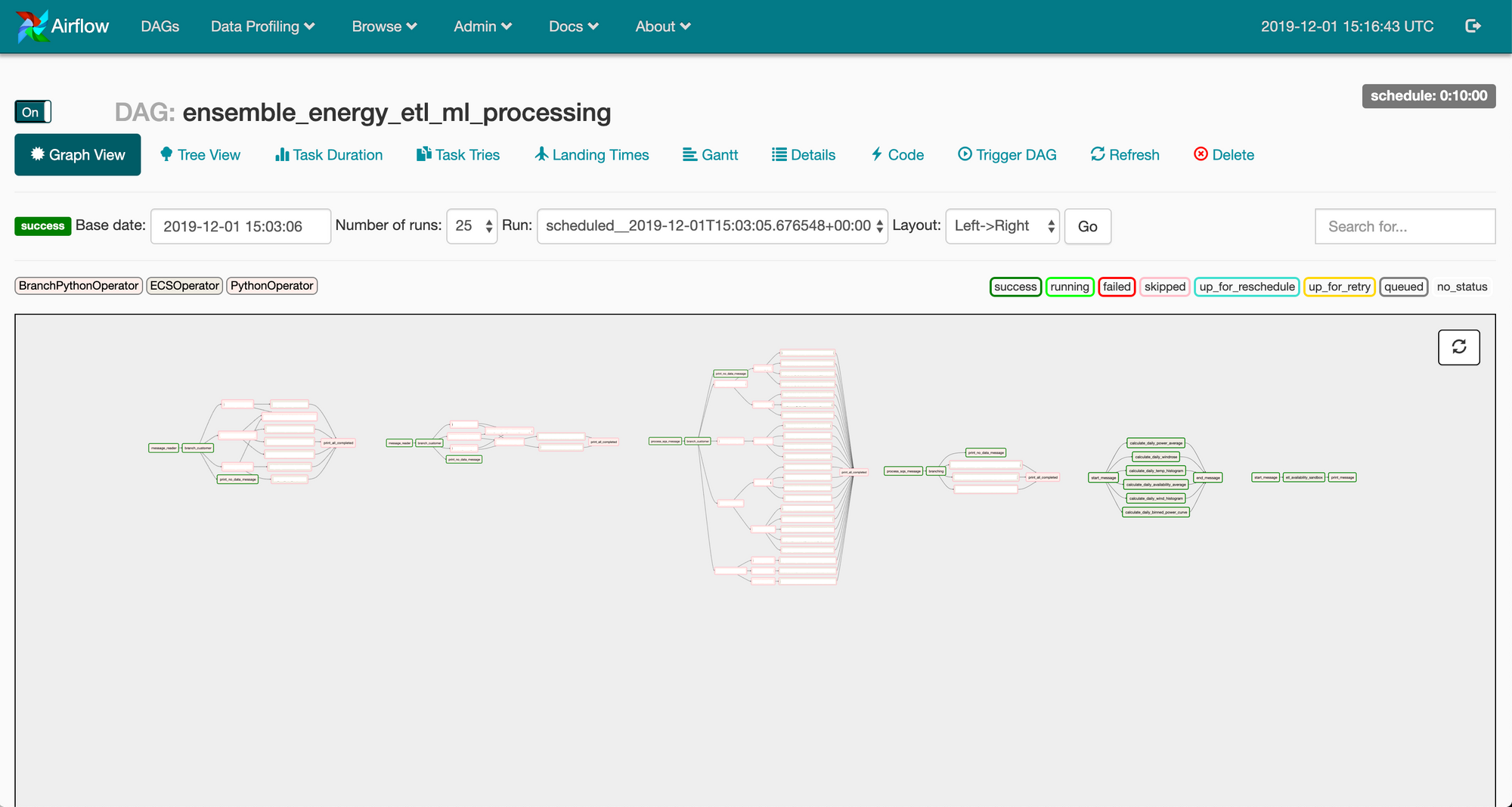

AIRFLOW ETL CODE

It scales up nicely for truly large data operations, and working through the PySpark API allows you to write concise, readable and shareable code for your ETL jobs.

Spark has all sorts of data processing and transformation tools built in, and is designed to run computations in parallel, so even large data jobs can be run extremely quickly. Learn more skills from ETL Testing Training SparkĪs long as we’re talking about Apache tools, we should also talk about Spark! Spark isn’t technically a python tool, but the PySpark API makes it easy to handle Spark jobs in your Python workflow. Airflow is highly extensible and scalable, so consider using it if you’ve already chosen your favorite data processing package and want to take your ETL management up a notch.

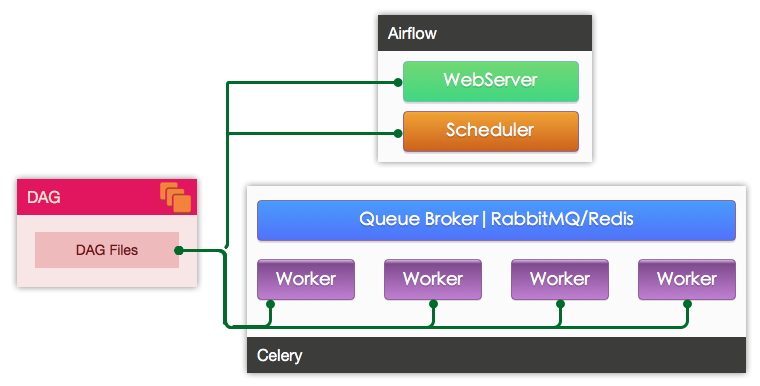

It comes with a handy web-based UI for managing and editing your DAGs, but there’s also a nice set of tools that makes it easy to perform “DAG surgery” from the command line. Airflow’s core technology revolves around the construction of Directed Acyclic Graphs (DAGs), which allows its scheduler to spread your tasks across an array of workers without requiring you to define precise parent-child relationships between data flows. While it doesn’t do any of the data processing itself, Airflow can help you schedule, organize and monitor ETL processes using python. Originally developed at Airbnb, Airflow is the new open source hotness of modern data infrastructure. Either way, you’re bound to find something helpful below. Some of these packages allow you to manage every step of an ETL process, while others are just really good at a specific step in the process. We’ve put together a list of the top Python ETL tools to help you gather, clean and load your data into your data warehousing solution of choice. Luckily for data professionals, the Python developer community has built a wide array of open source tools that make ETL a snap. Any successful data project will involve the ingestion and/or extraction of large numbers of data points, some of which not be properly formatted for their destination database. ETL is the heart of any data warehousing project.
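The sketch below does not use petl's own API. petl wraps steps like these in functions such as `fromcsv`, `convert` and `todb`. As a rough, dependency-free illustration of the extract, clean and load flow described above, it uses only the standard library's `csv` and `sqlite3` modules. The CSV contents, the column names (`name`, `signup_date`, `amount`), the `signups` table and the helper functions are all hypothetical. Rows that aren't properly formatted for the destination table are rejected rather than loaded.

```python
import csv
import io
import sqlite3
from datetime import datetime

# Hypothetical raw extract: Bob has a malformed date, Carol a non-numeric amount.
RAW_CSV = """name,signup_date,amount
Alice,2023-01-15,10.50
Bob,15/01/2023,7.25
Carol,2023-02-01,n/a
Dan,2023-03-10, 3
"""


def extract(text):
    """Extract: parse CSV text into a list of dicts keyed by the header row."""
    return list(csv.DictReader(io.StringIO(text)))


def clean_row(row):
    """Transform: normalise one row into a tuple, or return None if it can't be fixed."""
    try:
        date = datetime.strptime(row["signup_date"].strip(), "%Y-%m-%d").date()
        amount = float(row["amount"])  # float() tolerates surrounding whitespace
    except ValueError:
        return None  # not properly formatted for the destination table
    return (row["name"].strip(), date.isoformat(), amount)


def load(conn, rows):
    """Load: create the (hypothetical) target table if needed and insert the rows."""
    conn.execute(
        "CREATE TABLE IF NOT EXISTS signups (name TEXT, signup_date TEXT, amount REAL)"
    )
    conn.executemany("INSERT INTO signups VALUES (?, ?, ?)", rows)
    conn.commit()


def run_pipeline(conn, text):
    """Run extract -> transform -> load; return (rows loaded, rows rejected)."""
    cleaned = [clean_row(r) for r in extract(text)]
    good = [r for r in cleaned if r is not None]
    load(conn, good)
    return len(good), len(cleaned) - len(good)


conn = sqlite3.connect(":memory:")
loaded, rejected = run_pipeline(conn, RAW_CSV)
print(loaded, rejected)  # → 2 2
```

Keeping each stage in its own function mirrors how the tools above split up the work. A dedicated package can replace any single stage (for example the loading step) without touching the others.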

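The next sketch goes back to the Airflow section and makes the DAG idea concrete. Each task declares which tasks it depends on, and a scheduler can then hand out every task whose upstream tasks have finished. Tasks that become ready at the same time could go to different workers. The sketch is not Airflow's API and not how Airflow's scheduler is implemented. It uses only the standard library's `graphlib.TopologicalSorter` (Python 3.9+), and the task names are hypothetical.

```python
from graphlib import TopologicalSorter

# Hypothetical ETL tasks, each mapped to the set of tasks it depends on (its upstreams).
dag = {
    "extract_orders": set(),
    "extract_customers": set(),
    "clean_orders": {"extract_orders"},
    "clean_customers": {"extract_customers"},
    "join": {"clean_orders", "clean_customers"},
    "load_warehouse": {"join"},
}


def schedule_in_waves(graph):
    """Group tasks into waves: every task in a wave has all of its upstreams done,
    so the tasks within one wave could run in parallel on separate workers."""
    sorter = TopologicalSorter(graph)
    sorter.prepare()  # raises graphlib.CycleError if the graph is not acyclic
    waves = []
    while sorter.is_active():
        ready = sorted(sorter.get_ready())  # sorted only to make the output stable
        waves.append(ready)
        sorter.done(*ready)  # pretend every task in this wave succeeded
    return waves


for i, wave in enumerate(schedule_in_waves(dag), start=1):
    print(i, wave)
# 1 ['extract_customers', 'extract_orders']
# 2 ['clean_customers', 'clean_orders']
# 3 ['join']
# 4 ['load_warehouse']
```

In Airflow itself you would declare the same dependencies between task objects (for example with the `>>` operator) and leave it to the scheduler to work out the order and distribute the tasks.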